Robotic Arm

Hiwonder

Hiwonder LeRobot SO-ARM101 Open-Source 6-Axis Robotic Arm with AI Vision & AI Recognition for Embodied Intelligence Programming

- 【Seamless Hugging Face Integration】The SO-ARM101 is an embodied-AI robotic arm built on the Hugging Face LeRobot project. It connects directly to the LeRobot ecosystem, giving easy access to shared code, templates, and pre-trained models.

- 【End-to-End Imitation Learning】With the Hugging Face LeRobot framework, you collect training data by teleoperating the arm, train a model on that data, and then let the arm execute tasks autonomously and adapt to its environment.

- 【Dual-Camera Vision System】Equipped with both a gripper-mounted camera and an external camera, the system combines precise manipulation with environmental awareness for accurate imitation learning.

- 【High-Performance Servos】Six 30KG high-torque servos with magnetic feedback deliver smooth, stable motion without power shortfalls or jitter.

- 【Professional Visual PC Software】The PC software integrates servo scanning, status monitoring, and trajectory control. Together with the BusLinker V3.0 debugging board, it simplifies device control and debugging.

Hiwonder

Hiwonder ArmPi Ultra ROS2 3D Vision Robot Arm, with Multimodal AI Large Language Models (ChatGPT), AI Voice Interaction, Vision Recognition, Tracking & Sorting

【AI-Driven & Raspberry Pi-Powered】Hiwonder ArmPi Ultra is a ROS-based 3D vision robot arm developed for STEAM education. It is powered by a Raspberry Pi and compatible with ROS2. With Python and deep learning integrated, ArmPi Ultra is ideal for developing AI projects.

【High-Performance AI Robotics】 ArmPi Ultra features six intelligent serial bus servos with a torque of 25KG. The robot is equipped with a 3D depth camera and a WonderEcho AI voice box, and it integrates Multimodal Large AI Models, enabling a wide variety of applications such as 3D spatial grabbing, tracking and sorting, scene understanding, and voice control.

【Depth Point Cloud, 3D Scene Flexible Grabbing】ArmPi Ultra is equipped with a high-performance 3D depth camera. By combining the target's RGB data, position coordinates, and depth information through RGB+D fusion detection, it can grasp objects freely in 3D scenes and support other AI projects.

【AI Embodied Intelligence, Human-Robot Interaction】 Hiwonder ArmPi Ultra leverages Multimodal Large AI Models to create an interactive system centered around ChatGPT. Paired with its 3D vision, ArmPi Ultra boasts outstanding perception, reasoning, and action abilities, enabling more advanced embodied AI applications and delivering a natural, intuitive human-robot interaction experience.

【Advanced Technologies & Comprehensive Tutorials】With ArmPi Ultra, you will master a broad range of cutting-edge technologies, including ROS development, 3D depth vision, OpenCV, YOLOv8, MediaPipe, Large AI models, robotic inverse kinematics, MoveIt, Gazebo simulation, and voice interaction. We provide learning materials and video tutorials to guide you step by step, ensuring you can confidently develop your AI-powered robotic arm.

Hiwonder

Hiwonder JetArm Pro ROS1 ROS2 3D Vision Robot, with Multimodal AI Model (ChatGPT), AI Voice and Vision Interaction, Support Chassis, Sliding Rail, Conveyor Belt Add-on

【AI-Driven and Jetson-Powered】JetArm Pro is a high-performance 3D vision robot arm developed for ROS education scenarios. It is equipped with the Jetson Nano, Orin Nano, or Orin NX as the main controller, and is fully compatible with both ROS1 and ROS2. With Python and deep learning frameworks integrated, JetArm Pro is ideal for developing sophisticated AI projects.

【High-Performance AI Robotics & 3D Vision】 JetArm Pro features six high-torque smart bus servos, a 3D depth camera, a 7-inch touchscreen, and a microphone array—bringing together powerful hardware to support a wide range of AI-powered capabilities, including 3D spatial grasping, target tracking, object sorting, voice control, and embodied AI.

【Enhanced Human-Robot Interaction Powered by AI】JetArm Pro leverages Multimodal Large AI Models to create an interactive system centered around ChatGPT. Paired with its 3D vision capabilities, JetArm Pro boasts outstanding perception, reasoning, and action abilities, enabling more advanced embodied AI applications and delivering a natural, intuitive human-robot interaction experience.

【Versatile Multimodal Expansion】With support for Mecanum wheel and tank chassis, electric sliding rail, and conveyor belt, JetArm Pro easily adapts to creative AI applications like intelligent transport, line following, and automated workflows—making it ideal for building advanced, scalable AI scenarios.

【Advanced Technologies & Comprehensive Tutorials】With JetArm Pro, you will master a broad range of cutting-edge technologies, including ROS development, 3D depth vision, OpenCV, YOLOv8, MediaPipe, AI models, robotic inverse kinematics, MoveIt, Gazebo simulation, and voice interaction. We provide in-depth learning materials and video tutorials to guide you step by step, ensuring you can confidently develop your own AI-powered robotic arm.

Hiwonder

Hiwonder LeArm AI Desktop Robot Arm with AI Vision & Voice Interaction, Support Arduino Programming & Sensor Expansion

【ESP32 Controller & Arduino Programming】 LeArm AI is an open-source AI robot arm powered by ESP32 and fully compatible with Arduino programming. Built-in Bluetooth and multiple expansion ports make it ideal for function upgrades and secondary development.

【Inverse Kinematics & Smart Bus Servos】 Equipped with 6 smart bus servos and an advanced inverse kinematics algorithm, Hiwonder LeArm AI delivers precise path planning and smooth, efficient movements, making it well suited to complex tasks.

【Versatile Sensor Expansion】 LeArm supports a wide range of sensors, including AI vision, voice module, ultrasonic, and acceleration sensors. It enables creative applications like color recognition, target tracking, face detection, voice control, and distance measurement.

【Multiple Control Options】 Control LeArm AI with an app, PC software, or wireless controller. It's incredibly easy to get started, requiring no prior experience.

【Open Source Code & Comprehensive Learning Resources】 Comes with 200+ tutorials, sample experiments, open source code, circuit schematics, and well-commented programs—helping users dive into AI and programming while sparking endless creativity.

Hiwonder

Hiwonder xArm AI Desktop Programmable Robot Arm with AI Vision & Voice Interaction, Supports Arduino, Scratch & Python, Sensor Expansion

【3 Flexible Programming Methods】 xArm AI supports Arduino, Scratch, and Python. With xArm AI Robot tutorials, users can easily master AI and programming skills while unlocking their creativity.

【Enhanced AI Interaction】 Equipped with the WonderCam AI vision module and WonderEcho AI voice interaction module, Hiwonder xArm AI enables color recognition, tag tracking, facial recognition, voice broadcasting, and voice control, opening up a world of advanced AI applications.

【Advanced Inverse Kinematics】 Hiwonder xArm AI features intelligent serial bus servos and an advanced inverse kinematics algorithm, ensuring precise motion planning and smooth execution, even for complex tasks.

【Open for Secondary Development】 Powered by the CoreX Controller, the xArm AI offers multiple ports for servos, motors, and sensors, making it fully compatible with the Hiwonder sensor lineup and ideal for secondary development.

【Arduino Programming, Open Source】 Hiwonder miniArm is built on the ATmega328 platform and is compatible with Arduino programming. With open-source programs, detailed tutorials, and secondary development examples, miniArm is ideal for beginners who want to develop and program their own robotic arm.

【High Performance Hardware, Support Sensor Expansion】 miniArm is equipped with a 6-channel knob controller, a Bluetooth module, high-precision digital servos, and other hardware. It also provides multiple expansion ports for sensors such as the ESP32 Cam, accelerometer, touch sensor, and glowy ultrasonic sensor, enabling secondary development of features such as ultrasonic ranging and pose control.

【Versatile Control Options】 Hiwonder miniArm supports app control, and users can turn the knob potentiometers to control the arm in real time and to edit actions offline.

- 【High-quality AI Education Demonstration System】AiArm is a smart vision robot arm powered by a self-developed controller, CoreX. It adopts high-performance intelligent servos and vision modules and can be programmed in Scratch and Python. With its impressive capabilities, AiArm unlocks diverse AI applications, such as smart vision-guided recognition and grasping.

- 【AI Vision Recognition and Tracking】AiArm combines the WonderCam AI vision module to recognize and locate target objects, enabling the implementation of AI applications like color sorting and waste sorting.

- 【Different Motion Control Methods】Whether it's PC software control, offline control, or inverse kinematics control, you have the versatility to design and edit various actions, unlocking the full potential of the robot arm's capabilities.

- 【Multiple Sensor Expansion】By integrating additional sensors and modules, AiArm can perform a variety of interesting functions, such as an AI face-tracking fan and an ultrasonic vision hunt.

Hiwonder

Hiwonder JetArm ROS1/ROS2 3D Vision Robot Arm, with Multimodal AI Model (ChatGPT), AI Voice Interaction and Vision Recognition, Tracking & Sorting

【AI-Driven and Jetson-Powered】 Hiwonder JetArm is a high-performance 3D vision robot arm developed for ROS education scenarios. It is equipped with the Jetson Nano, Orin Nano, or Orin NX as the main controller, and is fully compatible with both ROS1 and ROS2. With Python and deep learning frameworks integrated, JetArm is ideal for developing sophisticated AI projects.

【High-Performance AI Robotics】 Hiwonder JetArm features 6 intelligent serial bus servos with a torque of 35KG. The robot arm is equipped with a 3D depth camera, a built-in 6-microphone array, and Multimodal AI Large Models, enabling a wide variety of applications, such as 3D spatial grabbing, target tracking, object sorting, scene understanding, and voice control.

【Depth Point Cloud, 3D Scene Flexible Grabbing】 JetArm is equipped with a high-performance 3D depth camera. By combining the target's RGB data, position coordinates, and depth information through RGB+D fusion detection, Hiwonder JetArm can grasp objects freely in 3D scenes and support other AI projects.

【Enhanced Human Robot Interaction Powered by AI】 JetArm leverages Multimodal AI Large Models to create an interactive system centered around ChatGPT. Paired with its 3D vision capabilities, JetArm boasts outstanding perception, reasoning, and action abilities, enabling more advanced embodied AI applications and delivering a natural, intuitive human robot interaction experience.

【Advanced Technologies & STEAM Education Tutorials】 With JetArm, you will master a broad range of cutting-edge technologies, including ROS development, 3D depth vision, OpenCV, YOLOv8, MediaPipe, AI models, robotic inverse kinematics, MoveIt, Gazebo simulation, and voice interaction. We provide in-depth learning materials and video tutorials to guide you step by step, ensuring you can confidently develop your own AI powered robotic arm.

Hiwonder

Hiwonder JetMax Pro JETSON NANO Robot Arm with Mecanum Wheel Chassis/ Electric Sliding Rail Support ROS Python

- Powered by Jetson Nano (included)

- Open source and based on ROS

- Deep learning, model training, inverse kinematics

- Abundant sensors for function expansion

- Reconfigurable with a Mecanum-wheel chassis or a sliding rail

Hiwonder

Hiwonder MaxArm Open Source Robot Arm Powered by ESP32 Support Python and Arduino Programming Inverse Kinematics Learning

【Advanced ESP32 Microcontroller】 MaxArm is powered by a high-performance ESP32 microcontroller, making it a versatile open-source robot arm for learning robot arm programming with both Arduino and Python.

【Linkage Mechanism, Perfect for STEAM Education】 This desktop robot arm features a robust linkage mechanism and integrates with a sliding rail, simulating real-world industrial automation right on your desk.

【AI Vision Module Ready with Hiwonder Ecosystem】 Unlock advanced AI robotic arm capabilities. MaxArm is fully compatible with Hiwonder sensors such as the WonderCam AI vision module, enabling projects in mask recognition, object sorting, and more.

【All-in-One STEAM Education Powerhouse】 As the ultimate STEAM education platform, Hiwonder MaxArm offers a complete toolkit to bridge theory and practice in coding, robotics, and AI for all learning levels.

【Multi-Control Flexibility】 Control your programmable robotic arm the way you prefer: choose from a dedicated mobile app, PC software, a wireless gamepad, or even a mouse for precise operation.

Hiwonder

Hiwonder ArmPi mini 5DOF Vision Robotic Arm Powered by Raspberry Pi Support Python, OpenCV Target Tracking for Beginners

【RPi AI Vision Robot】Hiwonder ArmPi mini is a smart vision robot arm powered by Raspberry Pi. It uses high-performance intelligent servos and an HD camera, and it can be programmed in Python. ArmPi mini unlocks diverse AI applications, such as vision-guided recognition and grasping.

【AI Vision Recognition & Tracking】 ArmPi mini combines an HD camera with the OpenCV library to recognize and locate target objects, enabling AI applications such as color sorting, target tracking, and intelligent stacking.

【Inverse Kinematics Algorithm】Hiwonder ArmPi mini employs an inverse kinematics algorithm for precise target tracking and gripping. Complete source code for the inverse kinematics function is also provided to help you learn AI.

【App Remote Control】 ArmPi mini can be controlled remotely through a dedicated app (iOS/Android) and PC software. The app also shows a live FPV (first-person view) feed for an immersive experience.

- 【Powered by ESP32 Controller】The xArm-ESP32 open-source desktop robotic arm uses an ESP32 open-source controller as its main control system and is programmed in MicroPython. The arm has an expansion interface, and with the sensor expansion package it can perform intelligent grasping, color sorting, and other activities.

- 【Supports Multiple Control Methods】The xArm-ESP32 supports three action-editing methods and six control methods in total, including PC software, a mobile app, a PS2 wireless controller, and a synchronous teaching device. It can also be controlled wirelessly over Bluetooth for easy, enjoyable operation.

- 【Inverse Kinematics Algorithm】We provide a comprehensive analysis of the xArm-ESP32 inverse kinematics, so you can thoroughly understand the operating logic of the robot arm.

- 【Intelligent Bus Servo, Powerful Power Core】The xArm-ESP32 employs intelligent serial bus servos that offer high accuracy and precise data feedback. Its wiring is simplified to reduce friction between the wires and brackets. The base servo has been upgraded to one with a torque of 25KG, enabling the robot arm to lift objects weighing up to 500g while maintaining smooth motion.

Hiwonder

Hiwonder JetMax JETSON NANO Robot Arm ROS Open Source Vision Recognition Programmable Robot

【AI-Driven, NVIDIA Jetson Nano-Powered】 Hiwonder JetMax is an open-source AI robotic arm developed on ROS. It uses a Jetson Nano as its controller, supports Python programming and mainstream deep learning frameworks, and can run a variety of AI applications.

【AI Vision, Deep Learning】 An HD camera mounted at the end of JetMax's arm streams FPV video. With OpenCV image processing, it can recognize colors, faces, gestures, and more; with deep learning, JetMax can perform image recognition and object handling.

【Inverse Kinematics Algorithm】 JetMax uses an inverse kinematics algorithm to accurately track, grab, sort, and palletize target items in its field of view. Hiwonder provides courses on inverse kinematics analysis and the DH model of the link coordinate frames, along with source code for the inverse kinematics function.

【Multiple Expansion Methods】 You can purchase an additional Mecanum wheel chassis or sliding rail to extend JetMax's range of motion and build more interesting AI projects.

【Detailed Course Materials and Professional After-sales Service】 We provide 200+ courses and provide online technical assistance to help you learn JetMax more efficiently! Note: Hiwonder only provides technical assistance for existing courses, and more in-depth development needs to be completed by customers themselves.

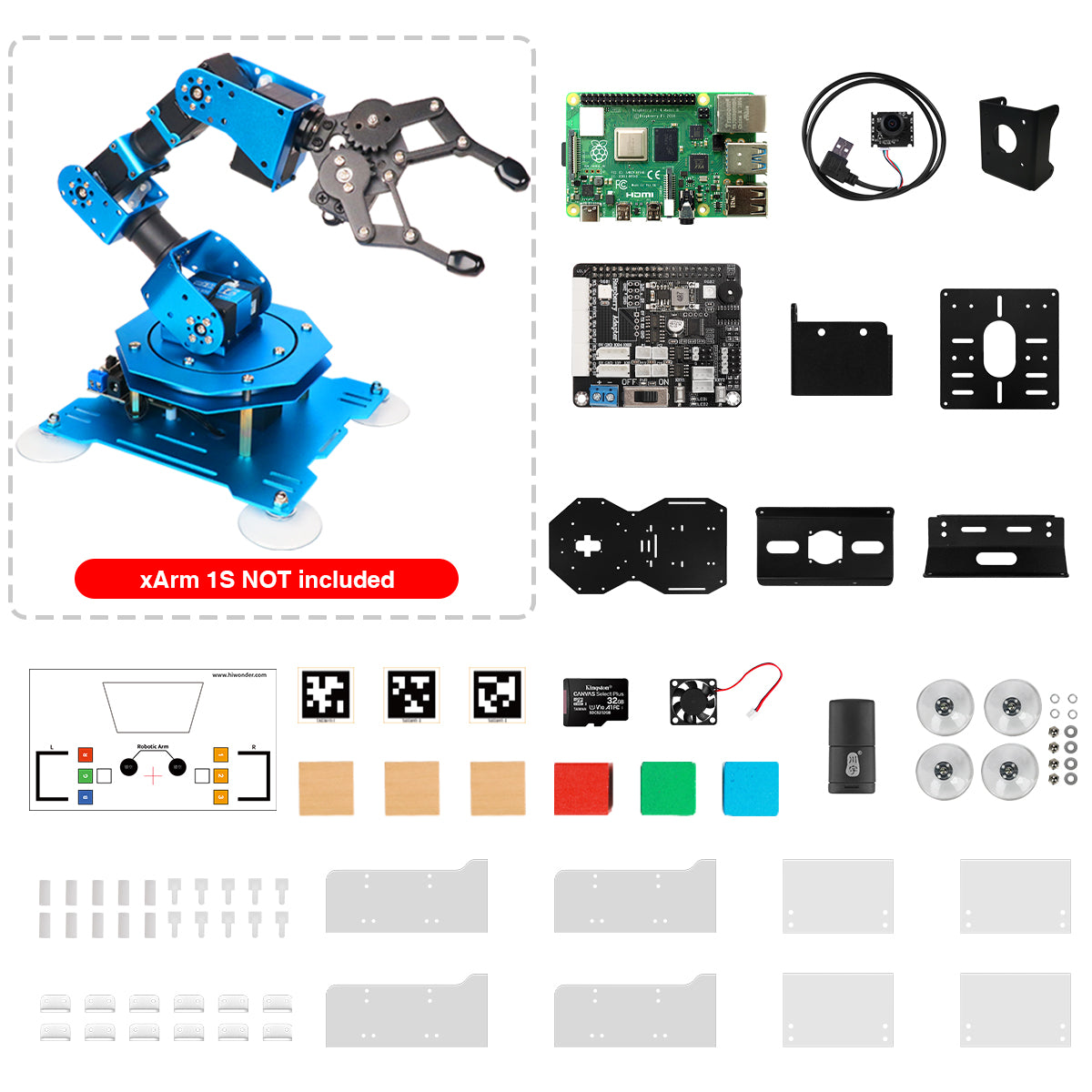

- Raspberry Pi 4B is included

- Raspberry Pi extension kit ONLY, robot arm is NOT included

- Upgrade xArm 1S robotic arm to AI vision function

- Provide Python source code and detailed tutorials

- Supports PC software, phone app, and VNC control

- HD camera, built-in ROS with MoveIt! and inverse kinematics

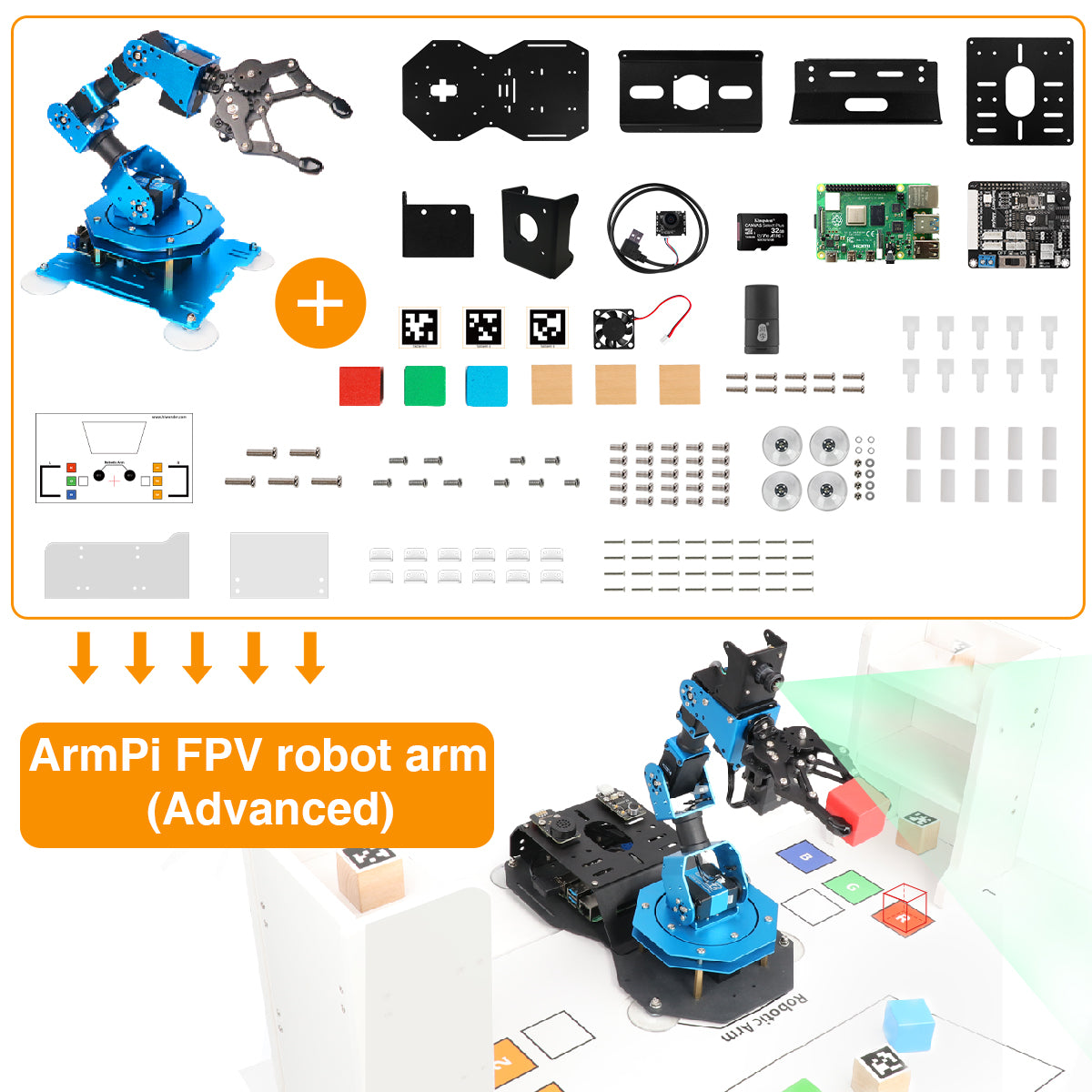

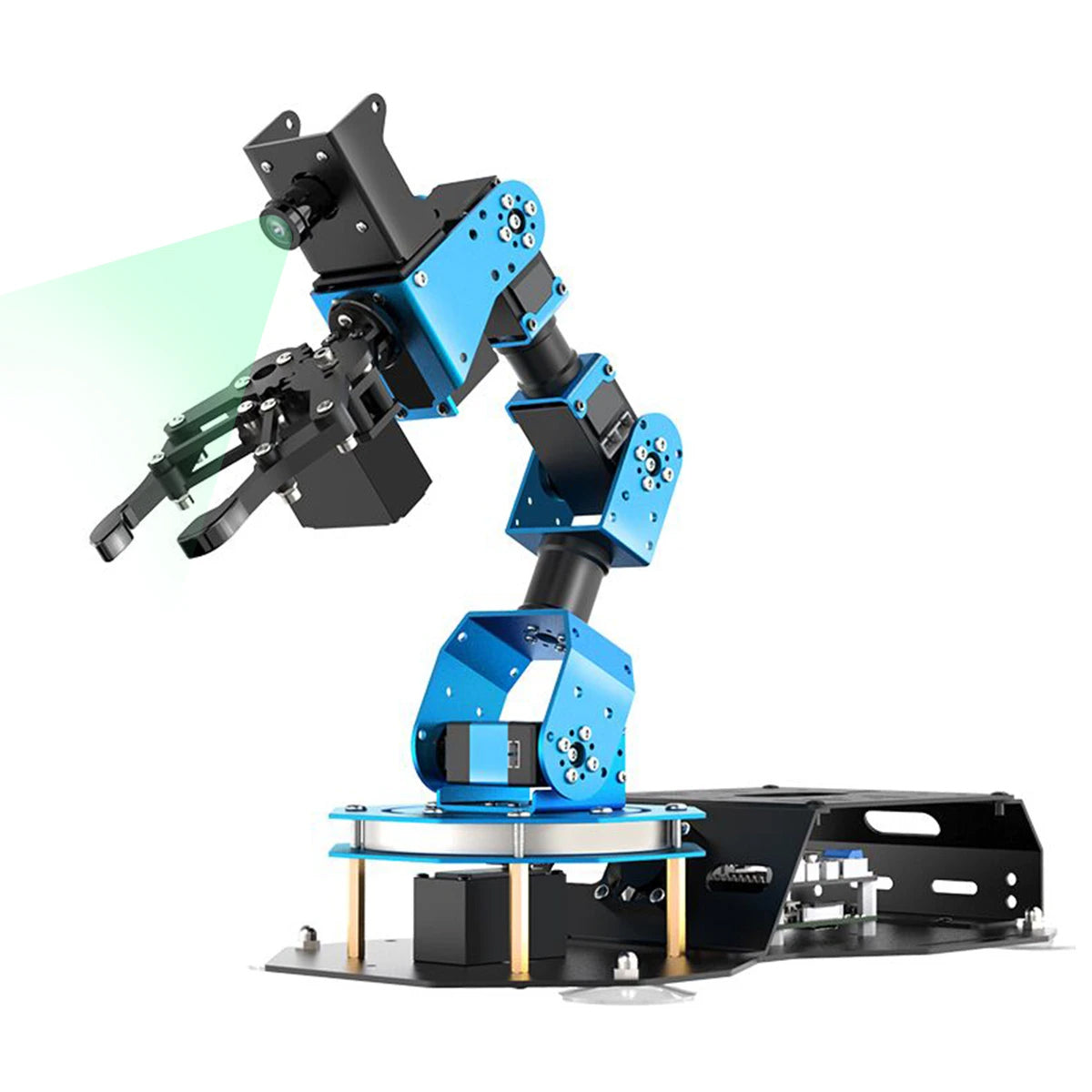

【ROS Robotic Arm with Raspberry Pi】 ArmPi FPV is an open-source AI robot arm based on the Robot Operating System (ROS) and powered by Raspberry Pi. Equipped with high-performance intelligent servos and an AI camera, and programmable in Python, it is capable of vision recognition and gripping.

【Abundant AI Applications】 Guided by artificial intelligence vision, ArmPi FPV excels in executing functions such as stocking in, stocking out, and stock transfer, realizing integration into Industry 4.0 environments.

【Inverse Kinematics Algorithm】 ArmPi FPV employs an inverse kinematics algorithm for precise target tracking and gripping. It also provides a detailed analysis of inverse kinematics and the DH model, along with the source code for the inverse kinematics function.

【Robot Control Across Platforms】 ArmPi FPV provides multiple control methods, including the WonderPi app (iOS and Android), a wireless controller, a mouse, PC software, and ROS, so you can control the robot however you prefer.

- 【xArm-UNO Unlimited Creativity】xArm UNO consists of the xArm 1S robot arm and a sensor kit for secondary development. The xArm 1S is a high-quality desktop robot arm, and the sensor kit includes a glowy ultrasonic sensor, a color sensor, a touch sensor, and more. By integrating these sensors with the arm, users can try activities such as distance-based gripping, color sorting, sound control, and other games.

- 【Equipped with UNO R3, Support Arduino Programming】The kit includes UNO R3 and the UNO R3 expansion board, supporting Arduino programming. Detailed Arduino codes are provided, allowing you to implement a variety of creative functions.

- 【Diverse Control Options】xArm-UNO supports control via PC, app, mouse, and a wireless controller. These options support both play and learning, making the kit well suited to learning programming and trying out creative ideas.

- 【High-Quality Robot Arm】xArm 1S robot arm is constructed from aluminum alloy, equipped with industry-grade bearings, and features 6 high-accuracy and high-torque serial bus servos that provide feedback on position, voltage, and temperature.