JetAuto: Building Robotic SLAM with Lidar and a Depth Camera

Far beyond driving! Equipped with Lidar, a depth camera, a 6-microphone array and an LCD screen, JetAuto can see, hear and speak. With these abilities, what can this robot do?

Using multi-machine communication, a group of JetAuto cars can be controlled to move in a fixed formation, such as a row, a column or a triangle. No matter how you interfere with them, none will drop out, and the group will keep its formation.

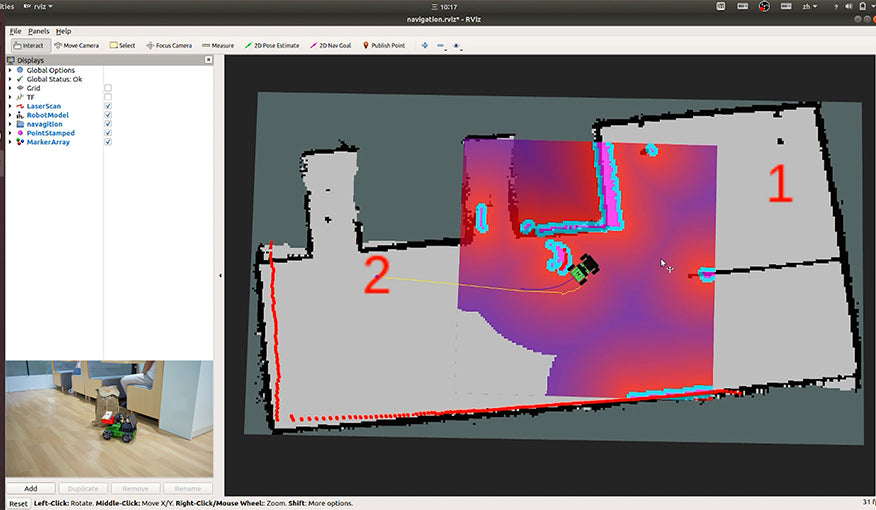

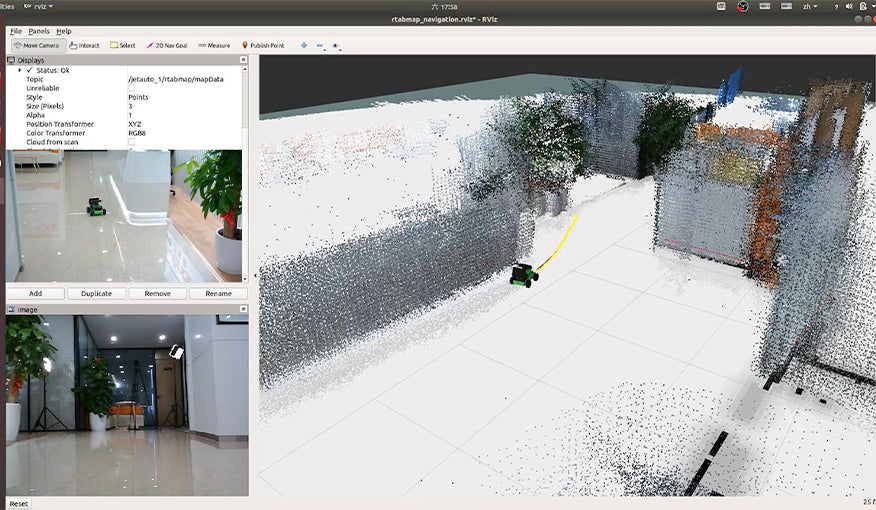
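One common way to keep a formation is a leader-follower scheme. Each follower continuously recomputes where it should be relative to the leader's current pose and steers toward that spot, so it recovers after being pushed. The sketch below illustrates that idea under stated assumptions. It is not JetAuto's actual implementation. The `FORMATIONS` offsets, `follower_targets` and `steer_to` are hypothetical names, and the proportional gains are arbitrary illustration values.

```python
import math

# Hypothetical formation offsets in the leader's body frame (x forward, y left), in metres.
FORMATIONS = {
    "row": [(0.0, 0.5), (0.0, -0.5)],         # followers beside the leader
    "column": [(-0.5, 0.0), (-1.0, 0.0)],     # followers one behind another
    "triangle": [(-0.5, 0.5), (-0.5, -0.5)],  # followers behind-left and behind-right
}


def follower_targets(leader_x, leader_y, leader_theta, formation):
    """Rotate each body-frame offset by the leader's heading, then translate by its position."""
    c, s = math.cos(leader_theta), math.sin(leader_theta)
    return [
        (leader_x + c * dx - s * dy, leader_y + s * dx + c * dy)
        for dx, dy in FORMATIONS[formation]
    ]


def steer_to(x, y, theta, target, k_lin=0.8, k_ang=2.0):
    """Hypothetical proportional controller: returns (linear, angular) velocity toward target."""
    dx, dy = target[0] - x, target[1] - y
    distance = math.hypot(dx, dy)
    heading_error = math.atan2(dy, dx) - theta
    # Wrap the heading error into [-pi, pi] so the robot turns the short way round.
    heading_error = math.atan2(math.sin(heading_error), math.cos(heading_error))
    return k_lin * distance, k_ang * heading_error
```

For example, with the leader at (1.0, 2.0) facing +y (`leader_theta = math.pi / 2`), the `"column"` targets are approximately (1.0, 1.5) and (1.0, 1.0), directly behind it. Because the targets are recomputed from the leader's live pose on every cycle, a follower that is pushed aside simply sees a larger error and drives back into place.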

Lidar and the depth camera act as JetAuto's "eyes", enabling it to map and navigate an unknown environment. With Lidar, JetAuto first builds a 2D map and then navigates to designated points on that map. A depth camera, by contrast, obtains the 3D coordinates of each point in the image, so its data can be used to reconstruct the scene for 3D mapping and navigation. Together, Lidar and the depth camera give JetAuto a remarkable sense of direction.

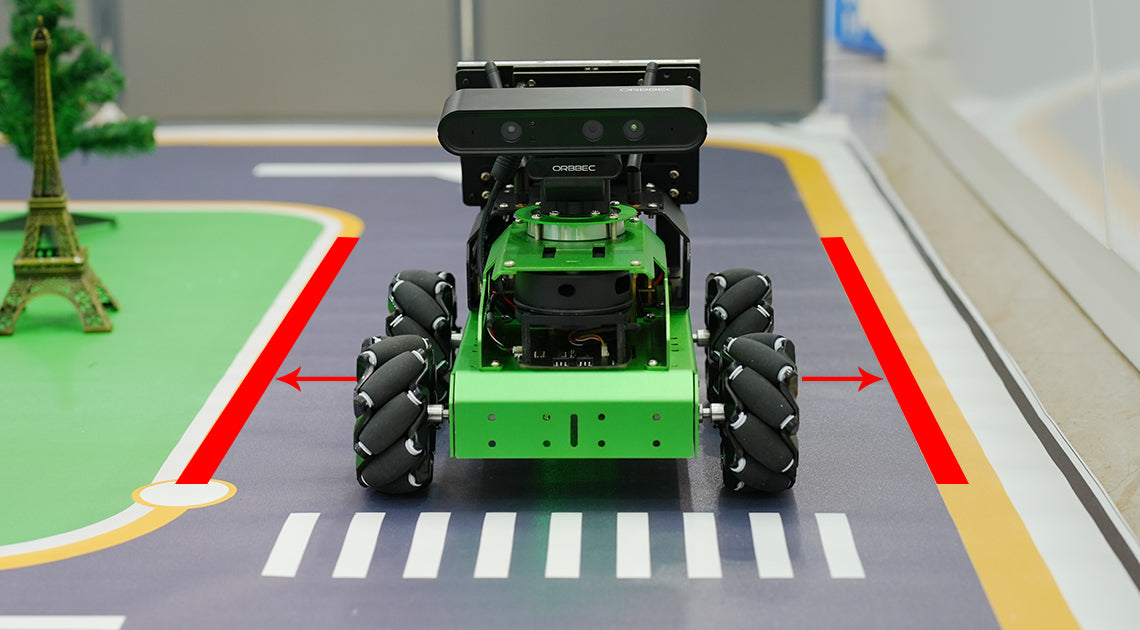

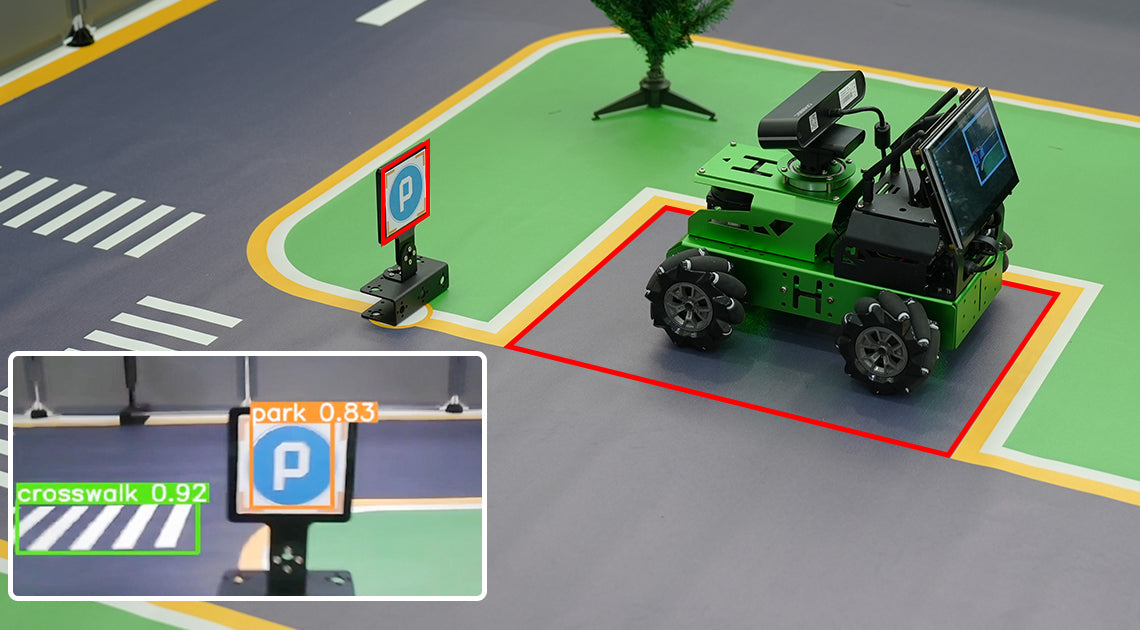
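A depth camera recovers a 3D point from each pixel by back-projecting the pixel through the standard pinhole camera model: X = (u - cx) · Z / fx and Y = (v - cy) · Z / fy, where Z is the measured depth. The minimal sketch below shows that step. It assumes a depth image given as nested lists of depths in metres, with 0 meaning "no reading". The intrinsics fx, fy, cx, cy would come from the camera's calibration, and the function names are hypothetical rather than part of JetAuto's software.

```python
def back_project(u, v, depth, fx, fy, cx, cy):
    """Pinhole model: map pixel (u, v) with depth Z to camera-frame (X, Y, Z)."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def depth_image_to_points(depth_image, fx, fy, cx, cy):
    """Convert a depth image (rows of depths in metres) into a list of 3D points."""
    points = []
    for v, row in enumerate(depth_image):       # v: row index (image y)
        for u, z in enumerate(row):             # u: column index (image x)
            if z > 0:                           # skip pixels with no valid depth
                points.append(back_project(u, v, z, fx, fy, cx, cy))
    return points
```

For example, with fx = fy = 600 and a principal point of (320, 240), the pixel (420, 240) at a depth of 2.0 m maps to roughly (0.333, 0.0, 2.0). Accumulating such point clouds as the robot moves is what allows a 3D map to be built. A Lidar scan plays the analogous role in 2D, giving a range per beam angle instead of a depth per pixel.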

By training deep learning models, JetAuto can drive autonomously. It recognizes lanes, road signs and traffic lights, assesses the traffic situation and decides whether to turn, acting as the "decision-making" system of a real autonomous car. It can also steer itself from a traffic lane into a parking spot when it recognizes a parking sign.

JetAuto is equipped with a 6-microphone array module, which lets it understand spoken commands and interact with people. Once it receives a command, it acts immediately; for example, you can tell it to navigate to a place you choose.