ROS Robot

Hiwonder

Hiwonder JetAuto Pro AI Robot Car with 6DOF Vision Robotic Arm, Supports ROS1 & ROS2, with Large AI Model (ChatGPT), SLAM Mapping/Navigation, AI Voice Interaction, Intelligent Sorting

【Smart ROS Robots Driven by AI】 JetAuto Pro is a professional robotic platform for ROS learning and development. Powered by NVIDIA Jetson or Raspberry Pi 5, it supports the Robot Operating System (ROS1 and ROS2). It leverages mainstream deep learning frameworks, supports MediaPipe development, and enables YOLO model training.

【SLAM Development and Diverse Configuration】 JetAuto Pro is equipped with a powerful combination of a 3D depth camera and Lidar. It supports a wide range of SLAM algorithms, including gmapping, Hector, Karto, and Cartographer, enabling precise multi-point navigation, TEB path planning, and dynamic obstacle avoidance.

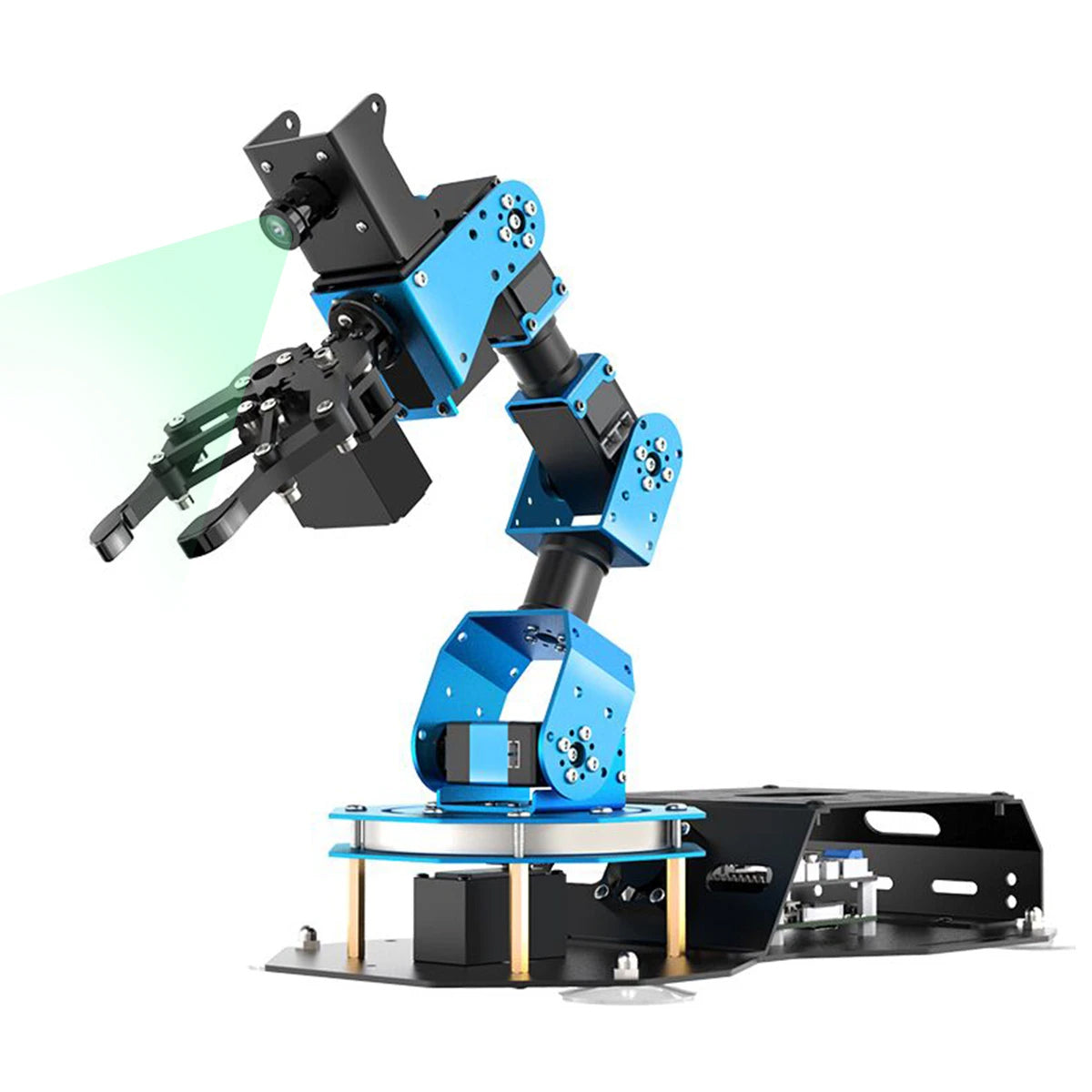

【High-Performance Vision Robot Arm】 JetAuto Pro features a 6DOF vision robot arm equipped with intelligent serial bus servos that deliver 35 kg·cm of torque. An HD camera mounted at the end of the robot arm provides a first-person perspective for object gripping tasks.

【Far-field Voice Interaction】 The JetAuto Pro Advanced Kit incorporates a 6-microphone array and a speaker for human-robot interaction applications, including text-to-speech (TTS) conversion, 360° sound source localization, and voice-controlled mapping and navigation. Combined with the vision robot arm, JetAuto Pro can perform voice-controlled gripping and transporting.

【Robot Control Across Platforms】 JetAuto Pro provides multiple control methods, including the WonderAi app (compatible with iOS and Android), a wireless controller, the Robot Operating System (ROS), and a keyboard, allowing you to control the robot at will.

【Empowered by Large AI Model, Human-Robot Interaction Redefined】 JetAuto Pro deploys multimodal AI models with ChatGPT at its core, integrating 3D vision and a 6-microphone array. This synergy enhances its perception, reasoning, and actuation capabilities, enabling advanced embodied AI applications and delivering natural, context-aware human-robot interaction.

Hiwonder

Hiwonder JetRover JETSON Robot Car with AI Vision Robotic Arm, Supports ROS1 & ROS2, with AI Large Model (ChatGPT), SLAM Mapping/Navigation, AI Voice Interaction, Intelligent Sorting

【Supports ROS1 and ROS2 Configuration】 Hiwonder JetRover is compatible with both ROS1 and ROS2. Its main controller options have been expanded to three: Raspberry Pi 5, Jetson Nano, and Jetson Orin Nano, so users can choose the one that best fits their needs.

【Smart ROS AI Robots】 JetRover is a professional robotic platform for ROS learning and development, powered by NVIDIA Jetson Nano and supporting the Robot Operating System (ROS). It leverages mainstream deep learning frameworks, supports MediaPipe development, and enables YOLO model training.

【SLAM Development and Diverse Configuration】 Hiwonder JetRover is equipped with a powerful combination of a 3D depth camera and Lidar. It supports a wide range of SLAM algorithms, including gmapping, Hector, Karto, and Cartographer, enabling precise multi-point navigation, TEB path planning, and dynamic obstacle avoidance.

【AI Vision Robotic Arm】 The JetRover robot car includes a 6DOF vision robot arm featuring intelligent serial bus servos with 35 kg·cm of torque. An HD camera mounted at the end of the robot arm provides a first-person view (FPV) for object grabbing tasks.

【Empowered by AI Large Model, Human-Robot Interaction Redefined】 JetRover deploys multimodal models with ChatGPT at its core, integrating 3D vision and a 6-microphone array. This synergy enhances its perception, reasoning, and actuation capabilities, enabling advanced embodied AI applications and delivering natural, context-aware human-robot interaction.

【Robot Control Across Platforms】 Hiwonder JetRover provides multiple control methods, including the WonderAi app (compatible with iOS and Android), a wireless controller, the Robot Operating System (ROS), and a keyboard, allowing you to control the robot car at will.

Hiwonder

Hiwonder JetAuto AI Robot Kit, NVIDIA Jetson Powered ROS1/ROS2 Educational Robot with multimodal AI model (ChatGPT), Voice Control, SLAM & AI Vision

【Driven by AI, Powered by Jetson】 JetAuto is a high-performance educational robot developed for ROS learning. Equipped with a Jetson Nano, Orin Nano, or Orin NX controller and compatible with both ROS1 and ROS2, Hiwonder JetAuto integrates deep learning frameworks with TensorRT acceleration, making it well suited to advanced AI applications such as SLAM and vision recognition.

【SLAM Development and Diverse Configuration】 JetAuto is equipped with a powerful combination of a 3D depth camera and Lidar. It supports a wide range of algorithms, including gmapping, Hector, Karto, Cartographer, and RRT, enabling precise multi-point navigation, TEB path planning, and dynamic obstacle avoidance. Using 3D vision, the JetAuto AI vision robot can capture point clouds of the environment for RTAB-Map 3D mapping and navigation.

【Empowered by Large AI Model, Human-Robot Interaction Redefined】 The JetAuto AI robot deploys multimodal models with ChatGPT at its core, integrating 3D vision and a 6-microphone array. This synergy enhances its perception, reasoning, and actuation capabilities, enabling advanced embodied AI applications and delivering natural, context-aware human-robot interaction.

【Robot Control Across Platforms】 Hiwonder JetAuto provides multiple control methods, including the WonderAi app (compatible with iOS and Android), a wireless controller, and a keyboard, allowing you to control the ROS robot at will. By running the corresponding code, you can command JetAuto to perform specific actions.

【STEAM Education Tutorials】 JetAuto's structured curriculum helps you master cutting-edge technologies, including ROS development, SLAM mapping and navigation, 3D depth vision, OpenCV, YOLOv8, MediaPipe, large AI model integration, MoveIt and Gazebo simulation, and voice interaction.

Supported by extensive documentation and video tutorials, JetAuto's progressive learning system breaks complex concepts down into digestible modules, guiding you from fundamentals to advanced implementations and empowering you to build your own intelligent robotic systems.

Hiwonder

Hiwonder ArmPi Pro Raspberry Pi 5 ROS Robotic Arm Developer Kit with 4WD Mecanum Wheel Chassis Open Source Robot Car

【Omni-Directional Movement, FPV】 The chassis is equipped with 4 high-performance encoder geared motors and 4 omnidirectional mecanum wheels, allowing ArmPi Pro to move in any direction across 360°. Combined with the HD camera mounted at the end of the robot arm, it provides a first-person view.

【Powerful Control System】 The Raspberry Pi 4B/5 brings major improvements in processor speed, multimedia performance, memory, and connectivity. Combined with the Raspberry Pi expansion board, it significantly enhances ArmPi Pro's AI performance!

【AI Vision Recognition, Target Tracking】 ArmPi Pro uses OpenCV as its image processing library and its FPV camera to recognize and locate target blocks, enabling color sorting, target tracking, line following, and other AI games.

【App Control, FPV Transmitted Image】 Android and iOS apps are available for remote control. With the app, you can control the robot in real time and switch between AI games with a single tap.

Hiwonder

Hiwonder JetHexa ROS Hexapod Robot Kit Powered by Jetson Nano with Lidar and Depth Camera, Supports SLAM Mapping and Navigation

【Powered by NVIDIA Jetson Nano】 JetHexa is a hexapod robot powered by NVIDIA Jetson Nano B01 that supports the Robot Operating System (ROS). It leverages mainstream deep learning frameworks, supports MediaPipe development, enables YOLO model training, and utilizes TensorRT acceleration.

【SLAM Development and AI Application】 Equipped with a 3D depth camera and Lidar, JetHexa achieves precise 2D mapping, multi-point navigation, TEB path planning, Lidar tracking, and dynamic obstacle avoidance. Using 3D vision, it can capture point clouds of the environment for RTAB-Map 3D mapping and navigation.

【Inverse Kinematics Algorithm】 JetHexa can switch flexibly between tripod gait and ripple gait. It employs an inverse kinematics algorithm, allowing it to perform "moonwalking" at a fixed speed and height. JetHexa also offers adjustable pitch angle, roll angle, direction, speed, height, and stride, giving you complete control over its movements. With its self-balancing function, JetHexa can handle complex terrain with ease.

【Robot Control Across Platforms】 Hiwonder JetHexa provides multiple control methods, including the WonderAi app (compatible with iOS and Android), a wireless controller, the Robot Operating System (ROS), and a keyboard, allowing you to control the robot at will. By running the corresponding code, you can command JetHexa to perform specific actions.

Hiwonder

Hiwonder PuppyPi ROS Quadruped Robot with Raspberry Pi, Integrated with Large AI Model (ChatGPT), Supports AI Vision, Voice Interaction, LiDAR, and Robotic Arm Attachment

【Raspberry Pi & ROS1/ROS2 Compatible】 PuppyPi is an AI vision robot dog built for education and development. Powered by a Raspberry Pi 5 and fully compatible with ROS1 and ROS2, it enables efficient AI computing and versatile robotics projects in Python. Complete source code and documentation are included to help you build and customize your own intelligent robot dog.

【Multimodal AI & Enhanced Human Robot Interaction】 PuppyPi integrates a multimodal model, featuring ChatGPT at its core for advanced human-robot interaction. Enhanced with AI vision, it delivers improved perception, reasoning, and action, creating a more natural and flexible interaction experience!

【High-Torque Smart Servos & Inverse Kinematics】 PuppyPi is equipped with 8 high-torque servos with stainless steel gears, offering fast response and stable output. The robot's legs use a linkage design combined with inverse kinematics algorithms to enable coordinated multi-joint movement and precise motion control.

【AI Vision Recognition & Tracking】 PuppyPi features a high-definition camera that enables a variety of AI vision capabilities, including color recognition, target tracking, face detection, ball kicking, line following, and MediaPipe gesture control.

【Lidar & Robotic Arm Expansion】 The PuppyPi quadruped robot supports ToF Lidar and robotic arm expansions. With the Lidar, it can perform 360° environmental scanning, SLAM navigation, and dynamic obstacle avoidance. With the robotic arm attached, Hiwonder PuppyPi can precisely grasp objects, opening up opportunities for advanced AI applications.

Hiwonder

Hiwonder JetMax JETSON NANO Robot Arm ROS Open Source Vision Recognition Programmable Robot

【AI-Driven, NVIDIA Jetson Nano-Powered】 Hiwonder JetMax is an open-source AI robotic arm built on ROS. Based on the Jetson Nano control system, it supports Python programming and mainstream deep learning frameworks, enabling a wide variety of AI applications.

【AI Vision, Deep Learning】 A high-definition camera mounted at the end of JetMax's arm provides FPV video transmission. Using OpenCV image processing, it can recognize colors, faces, gestures, and more. With deep learning, JetMax can perform image recognition and object handling.

【Inverse Kinematics Algorithm】 JetMax uses an inverse kinematics algorithm to accurately track, grab, sort, and palletize target items in its field of view. Hiwonder provides courses on inverse kinematics analysis and the link coordinate system (DH model), along with source code for the inverse kinematics function.

【Multiple Expansion Methods】 You can purchase an optional Mecanum wheel chassis or slide rail to extend JetMax's range of motion and take on more interesting AI projects.

【Detailed Course Materials and Professional After-sales Service】 We provide 200+ courses and online technical assistance to help you learn JetMax more efficiently! Note: Hiwonder provides technical assistance only for the existing courses; more in-depth development must be completed by customers themselves.

【ROS Robotic Arm with Raspberry Pi】 ArmPi FPV is an open-source AI robot arm based on the Robot Operating System (ROS) and powered by Raspberry Pi. Equipped with high-performance intelligent servos and an AI camera, and programmable in Python, it is capable of vision recognition and gripping.

【Abundant AI Applications】 Guided by AI vision, ArmPi FPV excels at warehouse tasks such as stocking in, stocking out, and stock transfer, making it suitable for Industry 4.0 scenarios.

【Inverse Kinematics Algorithm】 ArmPi FPV employs an inverse kinematics algorithm, enabling precise target tracking and gripping within its field of view. Detailed tutorials on inverse kinematics and the DH model are also provided, along with source code for the inverse kinematics function.

【Robot Control Across Platforms】 ArmPi FPV provides multiple control methods, including the WonderPi app (compatible with iOS and Android), a wireless controller, a mouse, PC software, and the Robot Operating System (ROS), allowing you to control the robot at will.