Hiwonder

Hiwonder ArmPi Ultra ROS2 3D Vision Robot Arm, with Multimodal AI Large Language Models (ChatGPT), AI Voice Interaction, Vision Recognition, Tracking & Sorting

【AI-Driven & Raspberry Pi-Powered】 Hiwonder ArmPi Ultra is a ROS robot arm with 3D vision, developed for STEAM education. It is powered by a Raspberry Pi and compatible with ROS2. With Python and deep learning integrated, ArmPi Ultra is ideal for developing AI projects.

【High-Performance AI Robotics】 ArmPi Ultra features six intelligent serial bus servos with a torque of 25 kg. It is equipped with a 3D depth camera and a WonderEcho AI voice box, and integrates multimodal large AI models, enabling a wide variety of applications such as 3D spatial grasping, tracking and sorting, scene understanding, and voice control.

【Depth Point Cloud & Flexible 3D Grasping】 ArmPi Ultra is equipped with a high-performance 3D depth camera. By combining the target's RGB data, position coordinates, and depth information through RGB+D fusion detection, it can grasp objects freely in 3D scenes and support other AI projects.
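
For readers curious how RGB+D fusion turns a 2D detection into a 3D grasp target, here is a minimal sketch assuming a standard pinhole camera model. The class and function names and the intrinsics values are hypothetical illustrations, not Hiwonder's API; the actual ArmPi Ultra pipeline may differ.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class CameraIntrinsics:
    """Pinhole intrinsics in pixels (hypothetical values in the example below)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def bbox_center(x_min: float, y_min: float, x_max: float, y_max: float) -> Tuple[float, float]:
    """Pixel center of a 2D detection box, used here as the grasp point in the RGB image."""
    return (x_min + x_max) / 2.0, (y_min + y_max) / 2.0


def deproject_pixel(u: float, v: float, depth_m: float,
                    k: CameraIntrinsics) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with depth in metres to a 3D point in the camera frame."""
    if depth_m <= 0:
        # A zero depth reading usually means the sensor had no valid measurement.
        raise ValueError("depth must be positive")
    x = (u - k.cx) * depth_m / k.fx
    y = (v - k.cy) * depth_m / k.fy
    return x, y, depth_m


if __name__ == "__main__":
    k = CameraIntrinsics(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    u, v = bbox_center(300, 200, 380, 280)
    print(deproject_pixel(u, v, 0.5, k))
```

The resulting point is in the camera frame; before inverse kinematics can plan the grasp, it would still need to be transformed into the arm's base frame (typically via a hand-eye calibration), which this sketch does not cover.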

【AI Embodied Intelligence, Human-Robot Interaction】 Hiwonder ArmPi Ultra leverages Multimodal Large AI Models to create an interactive system centered around ChatGPT. Paired with its 3D vision, ArmPi Ultra boasts outstanding perception, reasoning, and action abilities, enabling more advanced embodied AI applications and delivering a natural, intuitive human-robot interaction experience.

【Advanced Technologies & Comprehensive Tutorials】With ArmPi Ultra, you will master a broad range of cutting-edge technologies, including ROS development, 3D depth vision, OpenCV, YOLOv8, MediaPipe, Large AI models, robotic inverse kinematics, MoveIt, Gazebo simulation, and voice interaction. We provide learning materials and video tutorials to guide you step by step, ensuring you can confidently develop your AI-powered robotic arm.

Hiwonder JetAuto Pro AI Robot Car with 6DOF Vision Robotic Arm, Supports ROS1 & ROS2, with Large AI Model (ChatGPT), SLAM Mapping/Navigation, AI Voice Interaction, Intelligent Sorting

【Smart ROS Robots Driven by AI】 JetAuto Pro is a professional robotic platform for ROS learning and development. It is powered by NVIDIA Jetson or Raspberry Pi 5 and supports the Robot Operating System (ROS1 and ROS2). It leverages mainstream deep learning frameworks, incorporates MediaPipe development, and supports YOLO model training.

【SLAM Development and Diverse Configuration】 JetAuto Pro is equipped with a powerful combination of a 3D depth camera and a lidar. It supports a range of SLAM mapping algorithms, including gmapping, Hector, Karto, and Cartographer, and enables precise multi-point navigation, TEB path planning, and dynamic obstacle avoidance.

【High-Performance Vision Robot Arm】 JetAuto Pro features a 6DOF vision robot arm with intelligent serial bus servos that deliver a torque of 35 kg. An HD camera mounted at the end of the robot arm provides a first-person perspective for object grasping tasks.

【Far-field Voice Interaction】 The JetAuto Pro Advanced Kit incorporates a 6-microphone array and a speaker for human-robot interaction applications, including text-to-speech, 360° sound source localization, and voice-controlled mapping and navigation. Combined with the vision robot arm, JetAuto Pro can perform voice-controlled grasping and transport.

【Robot Control Across Platforms】 JetAuto Pro offers multiple control methods, including the WonderAi app (iOS and Android), a wireless controller, ROS, and a keyboard, so you can control the robot however you prefer.

【Empowered by Large AI Model, Human-Robot Interaction Redefined】 JetAuto Pro deploys multimodal AI models with ChatGPT at their core, integrating 3D vision and a 6-microphone array. This synergy enhances its perception, reasoning, and actuation capabilities, enabling advanced embodied AI applications and delivering natural, context-aware human-robot interaction.

Hiwonder

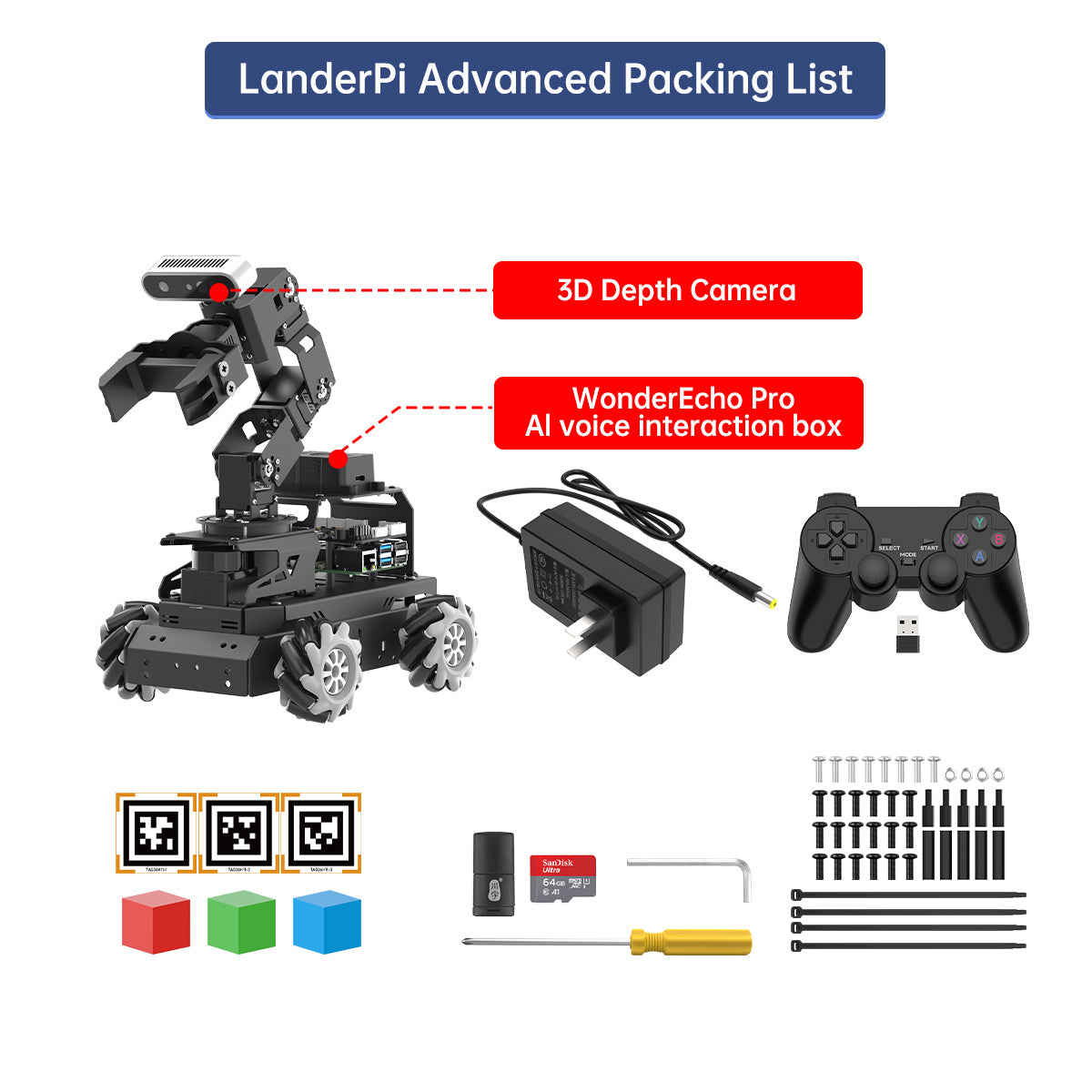

Hiwonder LanderPi Raspberry Pi Robot Car with AI Vision Robotic Arm, Supports ROS2, Large AI Model (ChatGPT), SLAM Mapping/Navigation, AI Voice Interaction, Intelligent Sorting

【Raspberry Pi 5 & ROS2 Robot Car】 LanderPi is powered by Raspberry Pi 5, compatible with ROS2, and programmed in Python, making it an ideal platform for AI robot development.

【Multiple Chassis Configurations】 LanderPi supports Mecanum-wheel, Ackermann, and tank chassis, offering flexibility for various applications and diverse user needs.

【High-Performance Hardware】 Equipped with DC geared encoder motors, a TOF lidar, a 3D depth camera, a 6DOF robotic arm, and other advanced components to ensure optimal performance and efficiency.

【Advanced AI Capabilities】 LanderPi supports SLAM mapping, path planning, multi-robot coordination, vision recognition, target tracking, and more, covering a wide range of AI applications.

【Autonomous Driving with Deep Learning】 Uses YOLOv8 model training to enable road sign and traffic light recognition, along with other autonomous driving features, helping users explore and develop autonomous driving technologies.

【Empowered by Large AI Model, Human-Robot Interaction Redefined】 LanderPi deploys multimodal models with ChatGPT at their core, integrating a 3D vision robotic arm and an AI voice interaction box. This synergy enhances its perception, reasoning, and actuation capabilities, enabling advanced embodied AI applications and delivering natural, context-aware human-robot interaction.

Hiwonder MentorPi M1 Raspberry Pi Robot Car – Mecanum wheel ROS2 Robot, with LLM ChatGPT, SLAM and AI Vision and Voice Interaction

【Raspberry Pi 5 & ROS2】Powered by RPi 5 and compatible with ROS2, MentorPi car is an ideal platform for developing AI robots. The system is fully programmable in Python, making it accessible for a wide range of projects.

【Choose Your Chassis】 Whether you need the precision of an Ackermann chassis or the maneuverability of Mecanum wheels, MentorPi has you covered. Its flexible design lets you switch between configurations to fit your needs.

【Top-Tier Hardware Inside】 Packed with high-performance components such as closed-loop encoder motors, a TOF lidar, a 3D depth camera, and high-torque servos, MentorPi delivers speed and accuracy.

【Advanced AI】 From SLAM mapping and path planning to multi-robot coordination and vision recognition, MentorPi robot supports a wide range of AI applications.

【Autonomous Driving】 Utilize YOLOv5 model training to enable advanced autonomous driving features, including road sign and traffic light recognition. This provides an excellent platform for learning and developing autonomous driving.

【Advanced Interaction】 Hiwonder MentorPi deploys multimodal models with ChatGPT at its core. By integrating a 3D vision system and an AI voice interaction box, the robot gains enhanced perception, reasoning, and actuation. This enables advanced embodied AI applications and delivers natural interaction.

Hiwonder miniHexa AI Hexapod Robot with AI Vision & Voice Interaction, Support Arduino Programming & Sensor Expansion

【ESP32 Controller & Arduino Programming】 Hiwonder miniHexa is an open-source AI hexapod robot powered by ESP32 and fully compatible with Arduino programming. It features precise motion control and multiple expansion ports, making it ideal for function upgrades and secondary development.

【18DOF Structure, High-Speed Micro Servos】 miniHexa is equipped with 18 anti-stall micro servos that are compact, fast-responding, low-noise, and highly precise, delivering powerful, accurate control for executing complex movements with ease.

【Inverse Kinematics & Flexible Movement】 Using cutting-edge inverse kinematics algorithms, miniHexa supports 360° omnidirectional motion. It switches smoothly between postures and body angles while keeping its balance, enabling seamless action combinations.

【Versatile Sensor Expansion】 miniHexa supports a wide range of sensors, including AI vision, a voice module, an ultrasonic sensor, a touch sensor, a matrix display, and more. It enables creative applications like color recognition, target tracking, face detection, voice control, and distance measurement.

【Comprehensive Learning Resources & Open Source】 Comes with more than 200 tutorials, open-source code, circuit schematics, and well-commented programs, helping users dive into AI and programming while sparking endless creativity.

Hiwonder PuppyPi ROS Quadruped Robot with Raspberry Pi, Integrated with Large AI Model (ChatGPT), Supports AI Vision, Voice Interaction, LiDAR, and Robotic Arm Attachment

【Raspberry Pi & ROS1/ROS2 Compatible】 PuppyPi is an AI vision robot dog built for education and development. Powered by a Raspberry Pi 5 and fully compatible with ROS1 and ROS2, it enables efficient AI computing and versatile robotics projects through Python. Complete source code and documentation are included to help you build and customize your own intelligent robot dog.

【Multimodal AI & Enhanced Human Robot Interaction】 PuppyPi integrates a multimodal model, featuring ChatGPT at its core for advanced human-robot interaction. Enhanced with AI vision, it delivers improved perception, reasoning, and action, creating a more natural and flexible interaction experience!

【High-Torque Smart Servos & Inverse Kinematics】 PuppyPi is equipped with 8 high-torque stainless steel gear servos, offering faster response times and stable output. The robot's legs use a link structure design combined with inverse kinematics algorithms to enable coordinated multi-joint movement and precise motion control.
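
To illustrate what inverse kinematics does for a leg, here is a minimal sketch that solves a generic planar two-link leg (hip and knee) with the law of cosines. It is not PuppyPi's actual solver: its legs use a linkage design, so real servo angles would need an extra mapping not shown here, and the function names and link lengths are hypothetical.

```python
import math
from typing import Tuple


def two_link_ik(x: float, y: float, l1: float, l2: float) -> Tuple[float, float]:
    """Hip and knee angles (radians) that place a planar 2-link leg's foot at (x, y).

    (x, y) is measured from the hip joint. Returns one of the two mirror
    solutions (the one with a positive relative knee angle).
    """
    d_sq = x * x + y * y
    d = math.sqrt(d_sq)
    if d > l1 + l2 or d < abs(l1 - l2):
        raise ValueError("foot target is out of reach")
    # Law of cosines gives the relative angle between the two links.
    cos_knee = (d_sq - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    knee = math.acos(max(-1.0, min(1.0, cos_knee)))
    # Hip angle = direction to the target minus the offset caused by the knee bend.
    hip = math.atan2(y, x) - math.atan2(l2 * math.sin(knee), l1 + l2 * math.cos(knee))
    return hip, knee


def two_link_fk(hip: float, knee: float, l1: float, l2: float) -> Tuple[float, float]:
    """Forward kinematics: foot position for given joint angles (used to check the IK)."""
    x = l1 * math.cos(hip) + l2 * math.cos(hip + knee)
    y = l1 * math.sin(hip) + l2 * math.sin(hip + knee)
    return x, y


if __name__ == "__main__":
    hip, knee = two_link_ik(0.5, -1.2, l1=0.8, l2=0.9)
    print(two_link_fk(hip, knee, 0.8, 0.9))  # approximately (0.5, -1.2)
```

Running forward kinematics on the IK result is a quick way to confirm the solver: the foot should land back on the requested target. Coordinating several legs then amounts to choosing foot targets over time and solving each leg independently.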

【AI Vision Recognition & Tracking】 PuppyPi features a high-definition camera that enables a variety of AI vision capabilities, including color recognition, target tracking, face detection, ball kicking, line following, and MediaPipe gesture control.

【Lidar & Robotic Arm Expansion】 PuppyPi supports TOF lidar and robotic arm expansion. With the lidar, it can perform 360° environmental scanning, SLAM navigation, and dynamic obstacle avoidance; with the robotic arm, it can grasp objects precisely, opening up opportunities for advanced AI applications.

Hiwonder Raspberry Pi 5 Robot Car MentorPi M1 Mecanum-wheel Chassis 2DOF Monocular Camera ROS2-HUMBLE Support SLAM and Autonomous Driving

【Raspberry Pi 5 & ROS2 Robot Car】 The MentorPi M1 robot is powered by a Raspberry Pi 5, compatible with ROS2, and programmed in Python, making it an excellent platform for developing AI robots.

【Built for Performance】 With closed-loop encoder motors, a TOF lidar, a 2DOF camera, and high-torque servos, the MentorPi car ensures optimal performance and efficiency for all your robotics projects.

【Unlock Advanced AI】 Explore a wide range of AI applications with features that include SLAM mapping, path planning, multi-robot coordination, vision recognition, and target tracking.

【Learn Autonomous Driving】 Utilize the YOLOv5 model for training the robot to recognize road signs and traffic lights, helping you explore and develop cutting-edge autonomous driving technologies.